“AI is taking over the world, but I’m not ready for it,” is a sentiment I shared with you a few weeks ago. My apprehensions about generative AI haven’t changed since then, but as I heard the word “AI” being uttered for the 100th time during this week’s Google I/O, a quick realization dawned on me: I was OK with that. Maybe not as ecstatic as the hundreds of I/O attendees clapping and laughing like a ’90s sitcom laugh track, but still, relatively OK and comfortable with Google’s approach to generative AI.

I’ve since rewatched the conference, rewinding specific moments many times over to analyze them. I’ve also given myself a couple of days to reassess whether my rose-tinted Google glasses were affecting my judgment. The result: I’m still generally comfortable with Google’s AI approach. Where OpenAI’s GPT-4 presentation and Microsoft’s Copilot launch left me in awe but also intimidated and concerned, Google’s take seems smartly selective and restrained.

The “responsible” AI message, which was hammered home, resonated with me. I am fully aware that Google came late to the generative AI table and that this concern for doing things right could be — and probably is — a perfect marketing spin to justify its delay and reassure investors (who are digging this, by the way; Google’s stock rose from around $108 earlier this week to $118 at the time of writing and is at its highest since the summer of 2022), all while playing the good-guy role and getting the general public on its side.

I also realize that in an alternate universe, had Google pushed on with generative AI before ChatGPT’s rise to fame, the roles might be reversed and it could be the one bulldozing through without any concern for responsibility or ethics, while other companies urged it to slow down. But we live in this reality and, maybe for the good of humanity as a whole, the biggest web company in the world has adopted* a responsible stance on AI and generative AI**.

*For now.

**In public.


Google crafted a perfectly balanced message about AI

The screenshot above shows 15 Google products that are actively used by more than half a billion — with a B — people and businesses. Of those, six products serve more than two billion. Google can impact a quarter of the world’s population in an instant, so imagine if that impact were nefarious.

That’s why I somewhat admire how well crafted the I/O presentation and the message around responsible AI were. Throughout the two hours, Google maintained a masterful balance between:

“We know we have the smarts and power, we’re aware this is scary, but look, it can also be fun! And incredibly useful, and we’ll hold your hand because we know it’s super intimidating to get started, and we’ll do our best to keep encouraging original web creators (because we rely on their data to farm it for our answers and ads, d’oh), though, you understand, we kinda have to compete with them and write good answers to searches, because otherwise, our product will appear useless to people, and yo, investors, we can do so much more, we’re just holding ourselves back now 😉, but here’s a small preview of more impressive things to come!”

Honestly, for the biggest web company in the world, with such a weight on its shoulders, so many different actors to please, and so much tension to navigate, I think Google did the impossible here. Or maybe PaLM 2 did? Who knows who crafted that entire keynote at this point?

Google and generative AI: First, lure them in with familiarity and fun

As a regular web user, Google first eased me in with the innocuously fun and hand-holding aspects of its generative AI approach.

Features like Help me write in Gmail and Google Docs, Help me organize in Sheets, and Help me visualize in Slides put generative AI in a familiar context with clear directives. Unlike ChatGPT, Google Bard, or Bing Chat, you’re not staring at a blank canvas with no idea where to go. You have a springboard from which to launch your ideas, while the AI has boundaries it must stay within. There are even preset options to make generated text shorter or more formal, or to change the style of images, for example. Sure, that limits creativity and what you can do with this side of Google’s AI, but I’m fine with that.

Instead of giving AI a blank canvas, Google has restricted it in many of its popular products.

Other fun implementations, like generative and emoji wallpapers and Magic Compose in Messages, are great, low-stakes ways for people to familiarize themselves with AI-generated content — and for Google to vastly enhance its algorithms and models, let’s be pragmatic.

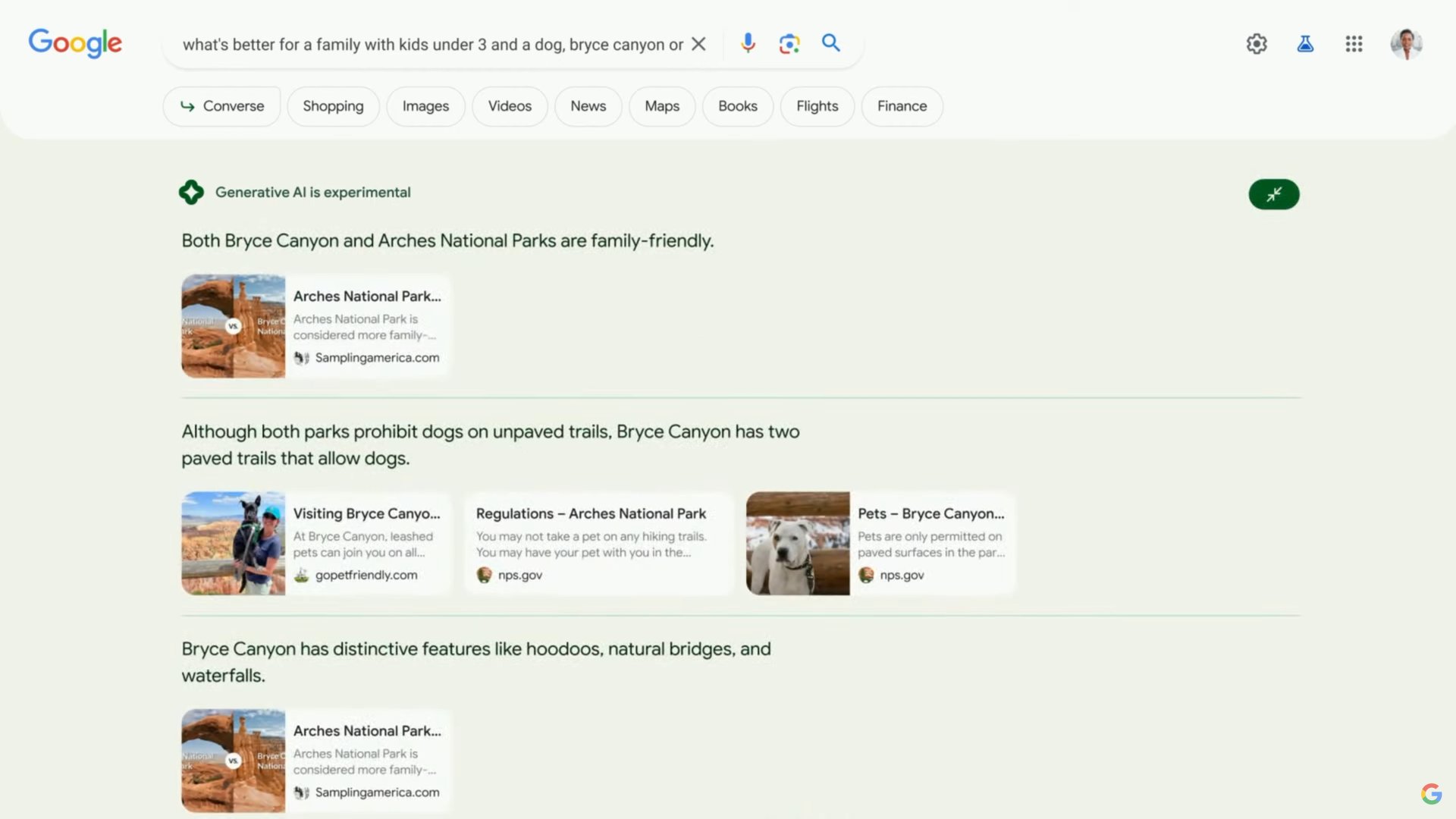

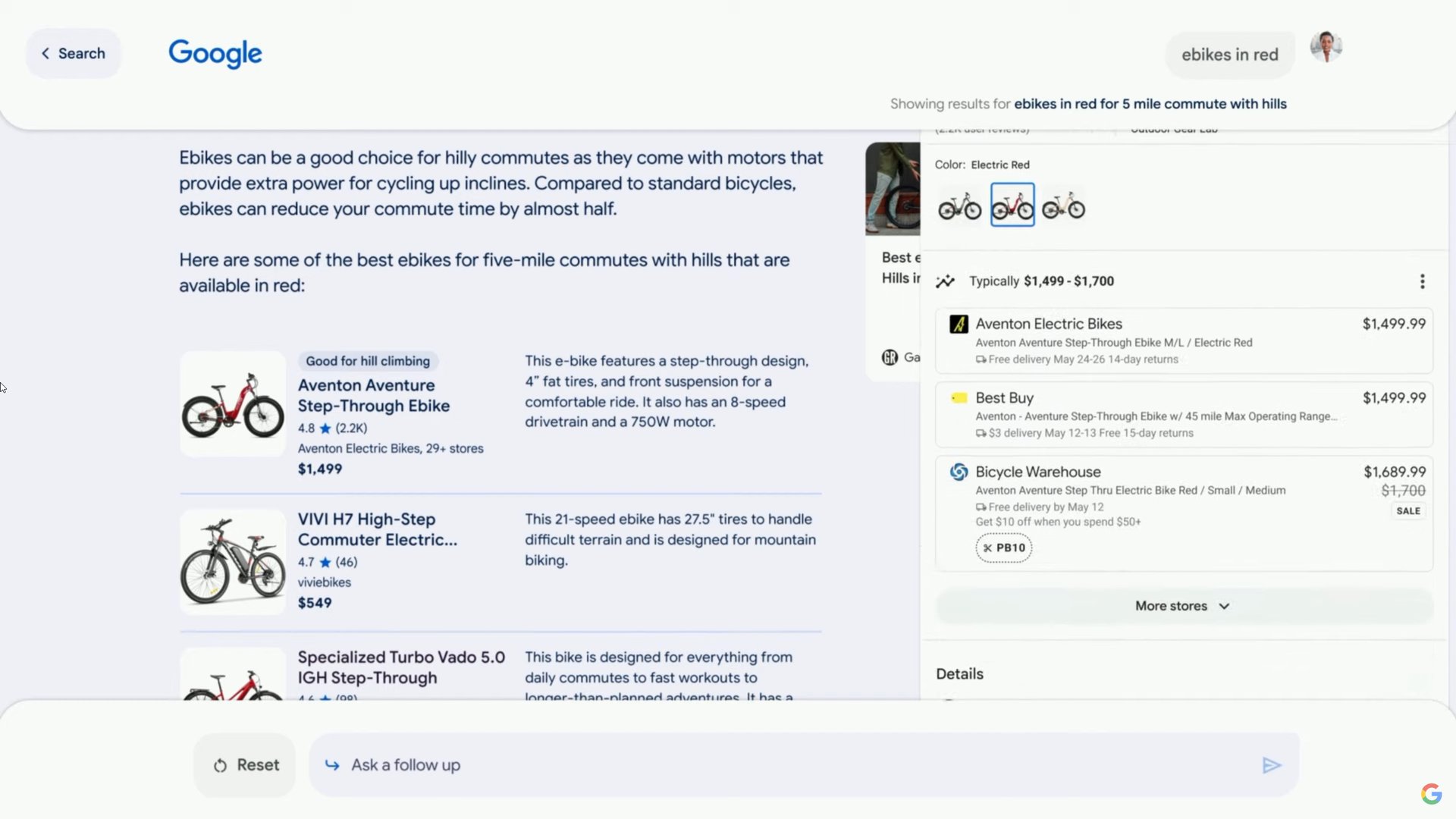

It was also easier for me to understand the usefulness and power of this kind of AI because Google applied it to products I already use every day. The new Immersive View for Routes in Maps sounds incredibly useful when I’m planning a trip along an unfamiliar route or to a new city, Project Tailwind would be an immense help for synthesizing information from dozens of my documents in Google Drive, and synthesized responses in Google Search should understand multi-criterion searches and save me hours of frantic browsing and manual comparisons.

I’m sure developers appreciated all the generative AI features made specifically for them too.

In many of these instances, the message was that AI-generated content and information are a good starting point, not a final product. That’s better than pretending AI in its current state can fully replace a writer, teacher, developer, doctor, or lawyer, when all it’s doing is collecting and organizing the information we humans have provided first. The only difference? It’s the fastest and most organized assistant ever.

Google’s AI approach is a little late, but also a little right

Besides the more obvious use cases, Google put forward less controversial applications of AI, like helping developers write and test code, and models fine-tuned on specialized datasets for threat analysis (Sec-PaLM) and medical research (Med-PaLM). It’s definitely easier for me to accept and perhaps embrace a predictive AI when I know it can help researchers build a more effective delivery method for cancer treatment.

Plus, there were many hints throughout the conference that showed Google has been — obviously, duh — watching the AI space closely, taking note of pernicious issues, and thinking ahead about its responsibility as a web leader to do things right and how it can impact the future of AI positively.

AI applications in medicine, fake image watermarking, proper sourcing, and other measures are all good signs for the future of AI.

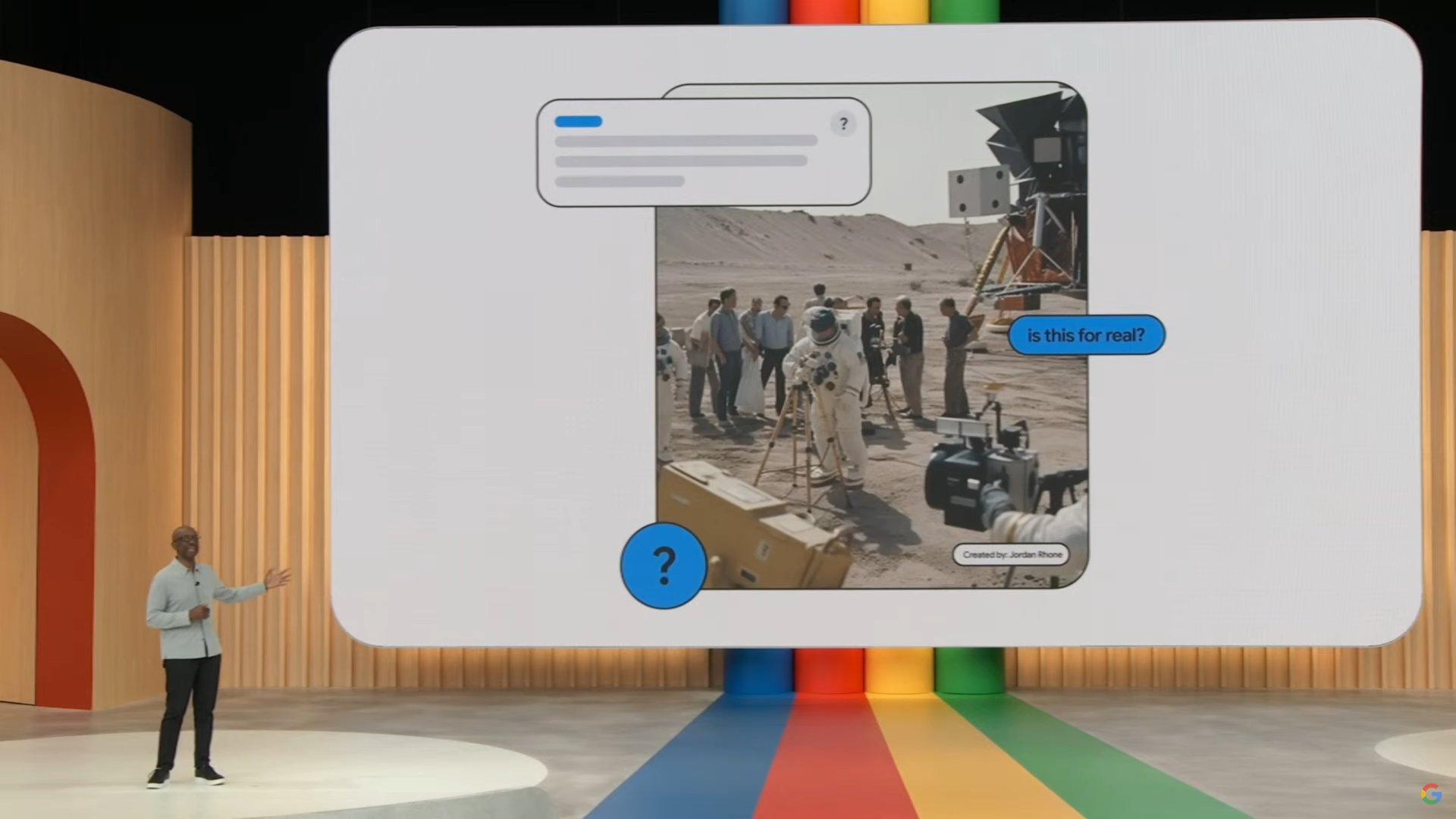

For example, if embraced by the rest of the AI players, watermarking and metadata for synthetic images should partly rein in misinformation and the dissemination of forged media. And fakes will be flagged in Google Images’ About this image tags or through Google Lens detection.

Proper sourcing, be it from the web for searches, GitHub for coding, or your own documents and emails in Project Tailwind and Sidekick, is a good start. Is it enough to keep web creators well-fed and motivated, as well as to push misinformation away completely? I highly doubt it, but at least I’d be able to trace many answers back to their origin instead of blindly trusting them, as I do with ChatGPT and other AI models now.

I was also pleasantly surprised to see Google mention its concerns about bias in AI, the importance of nailing different languages correctly before rolling them out willy-nilly, and its automated adversarial testing to reduce inaccuracies and prepare the AI for the million levels of hell that we humans will put it through.

Remember the early days of Bing Chat? Well, it’s better to be as ready as possible for all kinds of similar scenarios when two billion people get unfettered access to generative AI through Google Search.

So while Google is a few months late to the party, it has brought all its advantages with it: more users, more implementations, more investments, more developers, and more righteousness. As I mentioned earlier in the article, I’m glad we live in this version of reality, because if all of those had been used without the “responsible” ethos hanging above them, well... I don’t know. And I’m glad we don’t have to find out.

Being late doesn't matter. The moment Google gets in the race, it has a head start on everyone else.

The scary part is that Google doesn’t have a public head start yet. As a matter of fact, most of its AI products aren’t live yet or are still in limited previews. But when it gets going, none of that will matter. It’ll be as if I’d been running a marathon at my snail’s pace of 5 km per hour for six whole hours, and then Eliud Kipchoge started his race and caught up to me before I got anywhere near the finish line. With its ubiquity in our everyday lives, Google can catch up to the best players in seconds.

Its data will only get better with time because the more people use it — and there will be two billion right there in Search — the more accurate and nuanced it’ll get. AI needs training data and who has data? Google. Who can collect more data? Google.

Game over.

Exercising restraint while pushing the AI envelope

You’ve probably guessed from my writing so far that I’m teetering on the edge between naive fascination, resignation, excitement, and nervousness. But I’m still, at least for now, more at ease with Google’s approach to AI than OpenAI, Microsoft, Midjourney, or other players. Why?

I’ve often gotten the sense that OpenAI’s and Microsoft’s approaches, for example, have been so fascinating, but also so rushed, chaotic, overwhelming, and scary. There’s a “tech bro” mentality of moving fast, breaking things, and iterating faster, with complete disregard for consequences. I’ll leave this snippet from Michael Schwarz, Microsoft’s Chief Economist, for you to judge. Me? I’m thankful Microsoft doesn’t rule the world.

Don’t mistake this for blind trust in Google or hatred for competition — quite the contrary. I am all for diversification, and I think we need a bit of push and pull, but having someone steer the ship and set a good standard is crucial.

And yes, I’m sure there are people at Google who think the same way as Michael, but I’m thankful that’s not the company’s public stance. Google appears to at least be asking itself, “We can, but should we?” And, “If so, how do we?”

Where OpenAI and Microsoft are moving fast and breaking things, Google appears to be exercising some restraint.

We could all glimpse signs of this restraint throughout I/O. For instance, Google is restricting its AI’s access where it could have the most impact, and giving it more freedom in products you have to actively seek out. So while many of the new AI-based features will be siloed into specific popular Google products and use cases, users who don’t want to stifle their creativity or limit themselves can always go to the blank canvas of Bard and its PaLM 2 model. They can use its API and integrations to color outside the lines and build solutions tailor-made for them.

Then there were the teasers of more powerful things to come. The next-level Gemini model, Sidekick prompt-generator in Google Workspace, and Universal Translator were all expertly touted to appease investors then swiftly put back in the data center’s proverbial drawer until they’re ready and reliable. Another company with a tech-first approach would release some, if not all, of these as soon as possible and shrug the consequences off. I’m glad Google didn’t.

I lied, I still have apprehensions

My first and main question is: Where is the money?

We know ads are Google’s bread and butter, and we saw glimpses of those through the sponsored product placement in Search, but what else? This can’t possibly be the only monetization option. One generative AI query costs more processing power — and thus more money — than a simple search. So how is Google recouping that?

There are bound to be ads and sponsored results above the fold for each search. Does that mean those who pay get preferential treatment among the featured links? Will we find ads and recommended products in Google’s other AI implementations? I don’t want to see a calculator ad if I ask the AI in Sheets for help with a function!

Then there are questions about changes that could alter the web further, and forever. Excellent sites and sources are already dying, if not dead. Good human web writers and journalists are becoming commodities for many publishers; their voices aren’t as heard and their work isn’t as appreciated. So will sites with excellent SEO and poor content still win and get their results on top? Will AI-generated web content create a self-validating loop for featured snippets?

I'm very skeptical of AI monetization, ads, SEO gaming, and what those can do to the future of the web.

Imagine if one AI wrote web content, while another AI read it, parsed it, then featured it at the top of search results. That’d be a hell of a future. No humans needed. Where would the original data come from? Pure fabrication?

As a web writer and content creator on a site that lives and dies by its SEO and arbitrary Google rankings, I am also deeply worried about my career’s future. I can’t do anything about it, though, can I? The train has already left the station. I tell myself that, for now, I just have to focus on creating engaging and personal content (like this, hopefully?) and hope that’s enough to keep my voice above the fold or, preferably, in Google’s featured generative AI snippets.

From emoji wallpapers to altered realities, there’s a single step

Google started I/O 2023 with an impressive and fun demo of the upcoming Magic Editor in Photos. That set the tone for what was to come: a deluge of fascinating generative AI features that straddle the line between enhancement and fabrication, pushing the boundary of reality versus fiction and forcing me to move my own AI tolerance line a few notches ahead.

Announcement after announcement, it dawned on me that we can’t put the genie back in the bottle now. But if the genie has to be out, there are mostly right and mostly wrong ways to do it. For now, Google wins a skeptical nod of approval from me; this is a mostly right approach I can get behind.

For now, Google wins a skeptical nod of approval from me, but I remain cautious of everything it chose not to say.

I remain cautious of everything Google chose not to say, though. For instance, framing image generation under the guise of fun wallpapers and innocuous Slides illustrations is a genius way to defuse concerns about fabricated realities and keep the AI model grounded in strict parameters. After all, an emoji wallpaper is a million times less scary than a fake but realistic-style “photograph” on Midjourney or other image generators. Many of us would be more willing to make a poop and ducks emoji wallpaper than put the Pope in a Balenciaga puffer jacket.

But is Google’s restraint a temporary illusion to avoid a million pitchforks at once? And will it watch us while we start embracing these fancy new AI features and lose track of our apprehensions, then start slowly pushing those boundaries further and further under the pretense of innovation? In other words, have we learned anything from Facebook?
